The perils and pitfalls of making AI speak like humans

In June this year, a Google engineer made a bombshell claim: the Artificial Intelligence (AI) system he had been working on had become sentient – it had become aware of its own existence and begun expressing emotions and feelings. Google summarily dismissed his claims and fired the employee, but the incident once again brought into sharp focus the ethical conundrums surrounding the development of AI.

What was particularly interesting in this case was that the project was part of Google’s LaMDA (Language Model for Dialogue Applications), which involves developing language models for humans to converse with AI. Instances like this just go to show how language has become the ultimate test for a machine’s intelligence. Researchers are grappling with not just the technological challenges of making AI learn languages, but also the social and moral implications of machines becoming more intelligent.

What makes something as ordinary as language (which seems so intuitive to humans) such a hard nut to crack for a computer? One obvious reason is the richness of human languages. With ambiguities, wordplays and innuendos, language is such a complex evolutionary artefact of humans that having AI master it is indeed a lofty goal to strive for.

In the 1960s, Noam Chomsky, a pioneering figure of modern linguistics, introduced the idea of a “Universal Grammar”. He posits that every one of us is born with an innate ability to understand language, and that every language has more or less the same laws.

Language is what makes us uniquely human. But can computers ever reach this pinnacle?

‘Time flies like an arrow but fruit flies like a banana’

How do we know a machine or AI is intelligent?

Alan Turing, considered the father of modern computer science, proposed an experiment back in 1950. Famously called the Turing Test, the experiment basically gives an operative definition of intelligence (which means that for a machine to be called intelligent, it simply has to pass the test). In this test, a human and a machine are kept in separate rooms and interrogated by a human interrogator whose goal is to identify which of the two ‘contestants’ is human. The interrogator can ask any kind of questions to both, and if at the end, is unable to make out which is which, this means that the machine has successfully fooled the interrogator into believing it is human. As one robotics researcher put it, an example of an absurd question to ask a machine would be: “How come time flies like an arrow but fruit flies like a banana?” Humans would be able to recognise this as a play on words, but will a machine be able to?

At its core, the test hinges on computers being able to understand natural languages. In other words, classifying a machine as intelligent does not have anything to do with its ability to crunch large numbers or do complex mathematical calculations, but solely depends on its ability to understand and process language. No machine has satisfactorily been able to pass the Turing Test, although there have been some breakthroughs here and there.

Classifying a machine as intelligent … depends on its ability to understand and process language

One such remarkable advance has been the development of Generative Pre-trained Transformer-3 (GPT-3). GPT-3 is a neural network-based model that spits out sentences in human languages with very minimal input. Neural networks are mathematical models that mimic the operation of the human brain’s neuronal circuitry. Just like how neurons fire in the brain at different rates, driving specific functions, neural networks accept inputs that mimic “high” or “low” voltages, which in turn pass through various hidden layers (of other neurons), and activate other circuitry, eventually producing a desired output.

GPT-3 has been heralded as a marvellous feat of engineering, for it has 175 billion parameters – essentially its building blocks – using text found on the internet. The sentences it generates are therefore very realistic, just like one would find on a blog or webpage. Researchers have used it for a plethora of tasks such as summarising a long article, generating questions on a topic given an article, writing program code, even scripts for movies.

“There is enormous potential here,” says Hemavati D, a PhD student in the Department of Computer Science and Automation (CSA). “What makes something like GPT-3 possible today is the advent of technology like GPUs (Graphics Processing Units) that have massive parallelism and can harness so much power. To operate on 175 billion parameters is no joke.”

“Thanks in large part to Deep Learning, there has been significant progress in language processing over the last few years,” explains Partha Pratim Talukdar, Associate Professor (on leave) at the Department of Computational and Data Sciences (CDS), IISc. “Earlier, one had to spend considerable effort at identifying features that could be considered relevant. But over the last decade, Deep Learning has made it possible for it to learn the features on its own.”

He explains these features with an interesting example: Suppose you give an image to a machine and ask it to learn to classify whether the image contains a cat or a dog. Earlier, one had to manually identify the relevant features that would distinguish one from the other, and provide those features to the AI – for example, if the image contains pointy ears and has whiskers, it is a cat. “Now, with the advent of Deep Learning, we simply provide the images which are labelled as either cat or dog, and [the AI] learns on its own as to what are the relevant features that make the classification possible. We don’t provide the features anymore,” he explains. “Of course, we can’t make sense of the features that it uses to make the classification, in the sense that they are not intelligible to us, but it works and is successful at downstream tasks. The same principle has been applied to sentences. GPT-3 has been trained likewise on huge amounts of text and it figures out the features on its own as to which pieces of text make for good stories and plots, and other things. As a more recent example, there is PaLM, which has demonstrated tremendous capabilities in numeric reasoning, joke explanation, common sense reasoning and code completion among other things.”

Most recently, yet another novel AI tool has been released that many are heralding as a breakthrough – ChatGPT, a prototype chatbot developed by a company called OpenAI, who also developed GPT-3. A user can engage in detailed conversations with ChatGPT about almost anything. For instance, a user could type in that they are experiencing some symptoms of cold, and as a response ChatGPT could ask a few follow-up questions, in much the same way a doctor does, and eventually after engaging in a dialogue, it could even provide a diagnosis and suggest medicines. And many users have reported that the text, syntax and format are uncannily human-like.

Responding like a doctor is not the only thing the chatbot can do – one could engage in dialogues with ChatGPT on any topic whatsoever, because after all, the internet (on which it has been trained) has tons of information on every topic. But having the information out there on the internet is one thing, and being able to engage in conversation the way a human does is another ball game altogether. Which is why training the chatbot involved human AI trainers who provided conversations playing both roles – the user as well as the AI. And from this, over multiple iterations, ChatGPT learnt the nuances of conversations, and became capable of writing computer code, jokes, even essays and short stories based on imaginary prompts from users.

Challenges and concerns

Generating short stories may seem relatively harmless, but things can take a turn if such AI is used for more serious tasks like writing a research paper – which is what Almira Osmanovic Thunström, a researcher at the Institute of Neuroscience and Physiology at the University of Gothenburg did. Not just any research paper, but a research paper on GPT-3 itself (which she then submitted to a peer-reviewed journal). Such experiments can open up a can of worms, both ethically and legally. The irony is that GPT-3 concluded the paper with the words: “Overall, we believe that the benefits of letting GPT-3 write about itself outweigh the risks. However, we recommend that any such writing be closely monitored by researchers in order to mitigate any potential negative consequences.”

Viraj Kumar, Visiting Professor at the Kotak-IISc AI-ML Centre, gives another example. The same principles that enable GPT-3 to generate human language sentences can also allow AI to generate program code. “There is a tool called Github Copilot which, given a prompt, automatically generates code for that prompt,” he says. “Now as an educator, do I neglect it, or do I police it, and where do I draw the line? How do I give programming assignments to students?”

Another challenge with building such AI is that if the data that is used to train the model has in-built biases, they become imbibed into the AI as well. For instance, owing to the fact that it was trained on text from the whole internet, GPT-3 ended up learning racist biases. For example, it was found to mention violence in the context of Jews, Buddhists and Sikhs once, twice for Christians, but nine times out of ten for Muslims. But by injecting positive text about Muslims in the language model, the mention of violence reduced by about 40%. “One of the ways of mitigating bias is to build models on more representative data,” says Partha.

If the data that is used to train the model has in-built biases, they become imbibed into the AI as well

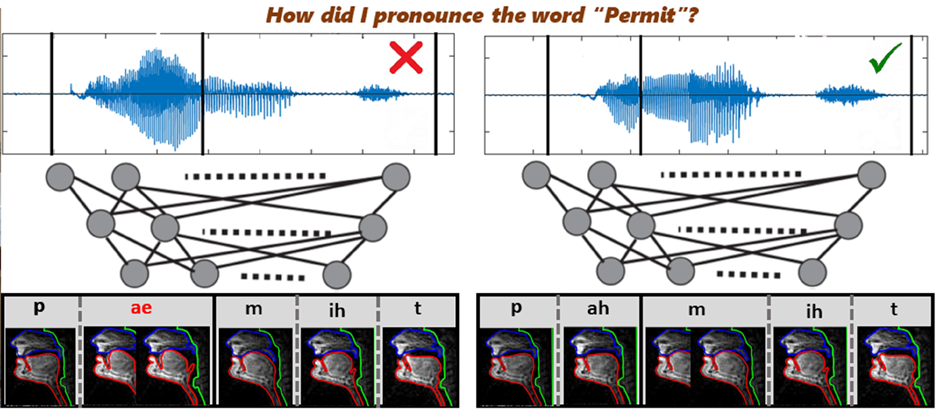

Speech processing is yet another challenge. Unlike written text, there is also a sequence of sounds associated with every sentence that is spoken. “Every language has its own unique characteristics in terms of sounds and the sequence of sounds, which will finally link to the words, the letters and the sentence. When somebody speaks a particular language, the sequence of sounds will be different compared to another language, unless these two languages are very similar,” explains Prasanta Kumar Ghosh, Associate Professor at the Department of Electrical Engineering (EE), IISc. “What the model will need to learn is beyond just words – the sequence of the sounds too.

Although it is similar to language processing, one major difference is that there is no white space in speech – like punctuation – unlike written text, which implies that any AI attempting to understand speech has to understand where a word or a sentence ends. To circumvent this issue, Prasanta explains, the AI is not trained using individual sounds for words, but instead it is trained using speech waveforms of running speech which present a sequence of sounds from different words.

Avoiding selective output is also a challenge, he adds, because if one collects data only from, say, Bengali speakers, the AI ends up learning only those contexts, metaphors and other biases built into that language.

Both Partha and Prasanta strongly advocate training and developing AI using Indian datasets, because most of the AI models we have today have been trained on datasets in the USA, UK or Europe, which may not be as representative of nuances in the Indian context.

These advances in AI are also happening in the backdrop of the widening digital divide in society. “Yes, it [AI] can do great things. But one must be aware that not everyone might have access to such technology – for instance schools with low funding may not have access to devices,” explains Viraj. “So there is every risk that the best AI might go to those who can afford it, increasing the gulf between the ‘haves’ and the ‘have-nots.'”

Soma Biswas, Associate Professor at the Department of Electrical Engineering, IISc, says, “As much as one could use AI for good, one could also misuse such technology for nefarious purposes, like deepfake technology.” Deepfake generates realistic photos and videos of people. One could therefore use it for generating misinformation. Awareness of the perils and pitfalls of AI-based technologies among the general public is also important. “The researchers who work on these technologies need to have an ethical mindset,” says Soma.

There are, therefore, many, many questions that are unresolved. Should we allow the use of AI in creative endeavours like art or even academic writing, and if so, should we be allowed to name the AI as the author or creator? Incidentally, what pronoun or gender do we assign to the AI? Can AI ever really become sentient at some point in the future, like the Google engineer claimed?

Finally, how do you know whether the article you have just read was written by AI or not?

Ullas A is a PhD student in the Department of Computer Science and Automation and a science writing intern at the Office of Communications, IISc