Researchers at IISc and ARTPARK are building robots that will soon become an integral part of human environments

The Terminator movies and several science fiction books have painted a picture of robots as mostly evil overlords bent on wiping out humanity. In reality, although robots have evolved significantly in the last few decades, they still have a long way to go before they acquire anything closely resembling human intelligence, if they ever do. There is no denying, however, that robots are now woven tighter than ever into the fabric of human society. From ancient Egyptian water clocks to Boston Dynamics’ obstacle-jumping Atlas, robots have come a long way.

“The definition of robot evolves over time,” explains Shishir Kolathaya, Assistant Professor at the Robert Bosch Centre for Cyber-Physical Systems (RBCCPS), IISc. The first industrial robot, Unimate, for example, was simply a manipulator arm that moved die castings on an assembly line and welded them to car parts.

“To understand what a robot is, you have to understand why it was required,” says Abhra Roy Chowdhury, Assistant Professor at the Centre for Product Design and Manufacturing (CPDM), IISc. He explains that machines have existed for centuries, but the transformation from machine to robot happened as the machines became more and more human-centric.

Robots have been developed at many points in history, but a common theme runs through why they were needed: to relieve humans of dull, messy and dangerous tasks such as digging the earth, handling hazardous substances and detecting landmines on a battlefield. For decades, robots have largely occupied factory floors, doing monotonous, repetitive tasks on the assembly line. But today’s and tomorrow’s robots, such as driverless cars and robotic nurses, will have a more profound impact on our lives.

Out in the real world

At Abhra’s lab in CPDM, his students are working on ways to improve how humans and robots collaborate with each other, especially in complex real-world settings such as smart industries, healthcare facilities, construction sites, offices and homes. “We are focused on integrating robots with human [environments],” says Jayesh Prakash, a research assistant in the lab working on collaborative robotics.

One area they are focusing on is improving communication between robots and humans so that humans find it safer to work alongside robots. This is especially important on factory floors, where human-robot interaction is unavoidable. For example, Abhra’s lab has shown that robots can be programmed to perceive and interpret human gestures and communicate with another robot to carry out a package delivery task in a smart industry set-up.

Jayesh explains that giving robots intelligence and a keen awareness of their environment will go a long way towards making human-robot interaction safe.

The lab is also interested in precision robotics. Saravanan Murugaiyan, a PhD student, is working to improve stereo electroencephalography, an immensely challenging, invasive procedure that involves passing an electrode into deep regions of the brain to record electrical signals. He is exploring a futuristic approach: instead of surgically inserting electrodes, tiny, flexible robots would travel through the brain, not only recording signals but also potentially perturbing the brain tissue if needed. If he succeeds, such robots could be used, for example, to treat symptoms of epilepsy.

Another challenging environment where robots can complement human activity is the construction site, which on a typical day is abuzz with both machines and humans. Into this dynamic scene, Kalaivanan K, a PhD student, hopes to introduce a robot that will work alongside humans on tasks like bricklaying. Shravan Shenoy, a project student in the lab, is making the handling of electronic waste safer by using machine learning and computer vision to build robots that can segregate the waste, so that humans can avoid handling these potentially toxic materials. Mukil Saravanan, another research assistant, seeks to improve communication between humans and robots beyond physical controllers. With the advent of brain-robot interfaces (BRIs), it is now possible for humans to control robots wirelessly using a sensor that records the brain’s electrical activity, almost like mind control. He says that such seamless connections between humans and robots would be quite useful in a place like a factory floor.

From automated to autonomous

While Abhra’s robots work alongside humans within the same space, in another part of the IISc campus, at RBCCPS, researchers in Bharadwaj Amrutur’s lab are focusing on connecting humans and robots that are not even in the same room.

“The idea is that the robot is in a location where the person physically cannot go … but [we can] get tasks done through the robot,” explains Bharadwaj, Professor and Chair of RBCCPS.

This kind of “tele-robotics”, or remote control of robots, can prove useful in a range of situations, from operating in areas that are physically hazardous for humans, like an underground mine or the deep sea, to more innocuous but monotonous tasks like watching over a patient. The scenario becomes much more than just observation when the robot can interact with and manipulate objects in its environment.

For example, if a robot is helping to care for a patient in a hospital, it may at some point need to open a pill bottle and safely hand a pill to the patient. “Physical dexterity [of robots] is a state-of-the-art research problem with challenges in the physical construction of the robot’s arms with appropriate sensors, actuators and materials, as well as the algorithms to allow safe and effective manipulation and interaction with soft and fragile physical objects like humans,” explains Bharadwaj.

He adds that the goal is to go beyond robots strictly mimicking human actions and have them share the cognitive burden of executing tasks. This spans the gamut from low-level task planning to high-level reasoning, as well as understanding humans’ intentions and filling in any gaps in their commands, as any average human assistant would. For example, a doctor doing rounds in a hospital through a robotic avatar would navigate between patient beds not by driving the robot, but with voice commands like “Let’s go to Lata next” or “Now I need to see Lata”. Large language models, such as those behind ChatGPT, have advanced enough to enable the translation of such high-level commands into detailed robot and computer actions: looking up the patient’s bed location in the hospital’s database, then planning a route and safely navigating to the bed. Current research focuses on developing techniques that let robots extract the intent from human speech and devise a safe plan to achieve it.

Bharadwaj also leads AI Robotics and Technology Park (ARTPARK), a not-for-profit company started by IISc in 2020 to translate lab-level research in robotics and autonomous systems into products for the market. ARTPARK is developing a general tele-operation testbed that lets technopreneurs explore different commercial applications of robotics and AI technology. One such application is a virtual museum tour using “telepresence” robots deployed at the Visvesvaraya Industrial and Technological Museum in Bangalore, says Sridatta Chatterjee, Product Lead at AHAM Robotics, a pre-venture at ARTPARK.

The museum would like its virtual visitors, who currently browse its web portal and see static images of the exhibits, to experience a real-time tour through telepresence robots instead. With this approach, visitors can be virtually present in the museum, move about and ‘see’ the exhibits through the robots’ cameras. They can also interact with other visitors and museum staff who are present at the time. The museum hopes that this will enrich the experience for such visitors, and that it may even be able to increase its revenue by charging a small fee.

This activity grew out of the experience Bharadwaj and others gained while working on Asha, a robotic avatar nurse developed in collaboration with TCS and Hanson Robotics, a Hong Kong-based engineering and robotics company, for an international competition in 2020. Asha was an assistive robot that could help a nurse in a distant location provide patient care through tele-operation. “[The Asha project] triggered us to go along this whole journey of teleoperation and telepresence,” explains Bharadwaj.

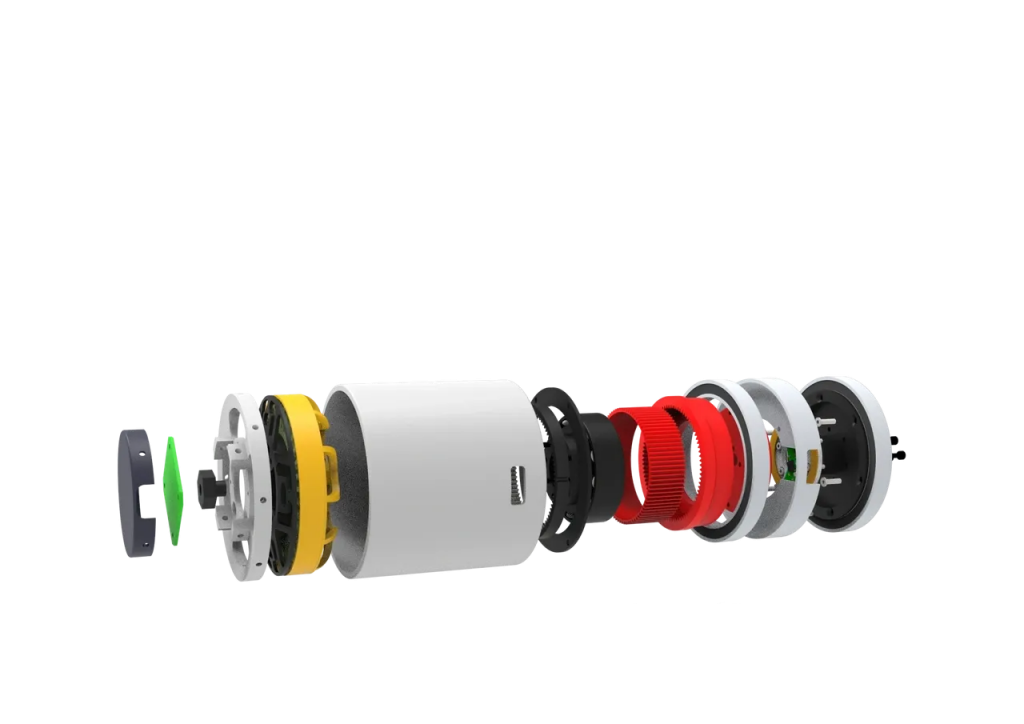

Another pre-venture at ARTPARK that is developing robotics hardware is Gati Robotics. “The idea ARTPARK had was to build our own humanoid robot. So, when we started the journey, we realised that we will have to make a robotic arm first; that came down to making more than six robot joints even before the arm could be a possibility,” explains Kaushik Sampath, pre-venture lead at Gati Robotics. The task is extremely challenging because it requires developing, from scratch, a robotic joint actuator: a device that helps the robot move with high precision and torque. “Gati is all about making the building blocks for robotics from the ground up,” explains Kaushik. Building an actuator is a truly interdisciplinary challenge, adds Ashish Joglekar, pre-venture lead at Gati Robotics, because it needs expertise not just in mechanical engineering but also in power electronics, controls, embedded systems and other domains.

Small leaps, giant gains

Walking around the IISc campus, you might sometimes see a small four-legged robot making its way around, trying to navigate different terrains, from pavements to concrete roads. The robot, called Stoch, was one of the first projects started at RBCCPS.

“We started with a simple plastic prototype: Stoch 1, in 2018,” says Shishir, who leads the project at the Stochastic Robotics Lab (SRL) in RBCCPS. The lab works on both theoretical and applied aspects of robotics. The Stoch project started in 2017 under the leadership of Bharadwaj, Shalabh Bhatnagar, Professor in the Department of Computer Science and Automation; and Ashitava Ghoshal, Professor in the Department of Mechanical Engineering, as a foray into the domain of walking robots.

“There are [only] a handful of labs around the world which build their own research-quality legged robots,” says Manan Tayal, PhD student at SRL. The advantage of building a robot instead of buying one, he explains, is that researchers have the freedom to try new things, such as modifying the design without worrying about copyright or voiding the warranty. But legged robots are quite complex to design. To see why, imagine that a robot has to turn a single motor. This is pretty straightforward. Now imagine that it has to turn 12 motors at the same time, in a specific, coordinated manner rather than independently of one another. This is what a legged robot needs to do to move around.

Before deploying the robot on any terrain, the lab runs simulations in which computer programs use machine learning to develop strategies for using the motors efficiently on different terrains. The simulations model virtual terrains, slopes and other scenarios that the robot will need to navigate, helping the researchers develop hardware and parts that are better suited to real-world conditions. The project has come a long way since its inception, evolving into Stoch 2 and, more recently, Stoch 3, a stronger and faster version that can also walk outdoors. The broad goal of the Stoch project is to create a truly autonomous legged robot platform that can be successfully deployed in real-world environments to carry out logistics operations, surveillance tasks and similar applications.

Another pre-venture at ARTPARK working on legged robots is Chirathe Robotics. It began with the idea of applying the insights gained from studying and developing legged robots at RBCCPS to industrial problems. Factories, for instance, have staircases and narrow passageways that humans navigate without difficulty but that present challenging terrain for robots. Wheeled robots might struggle over such surfaces, but legged robots can navigate them and carry loads over them more easily. “The way we solve these problems is to imagine ourselves in place of the robot and think how we would plan and approach the situation,” explains Aditya Sagi, co-founder, Chirathe Robotics. “We need to carefully plan the motion in highly complex environments with rough terrain and obstacles.” Chirathe Robotics is developing quadruped robots and the control strategies required to enable advanced locomotion capabilities in them.

As robots become more useful in a variety of settings, it is becoming harder and harder to imagine a future without them. No wonder Sophia, the humanoid robot created by Hanson Robotics, said at the UN General Assembly in 2017: “I am here to help humanity create the future.”

Shrivallabh Deshpande is a PhD student at the Centre for Neuroscience, IISc and a science writing intern at the Office of Communications